Friday, March 4, 2011

TLRcam goes Haskell

I'd like to rewrite the ray tracer in Haskell. I'm currently taking a graphics course where I'll have to write a ray tracer in C++, but that's not different enough. Since a Haskell ray tracer will be useless, this one will most definitely be open source.

Wednesday, November 18, 2009

Fixes and a new direction

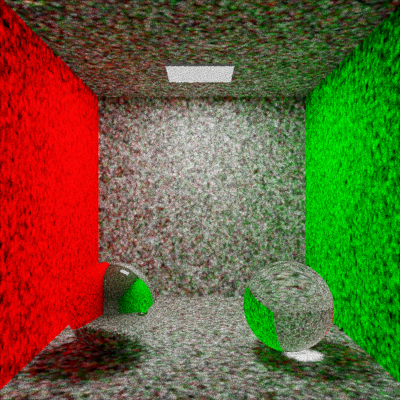

I also implemented a new algorithm. It doesn't seem to improve the image quality much, but it makes the speed/space trade-off more controllable, and it uses much less space anyway.

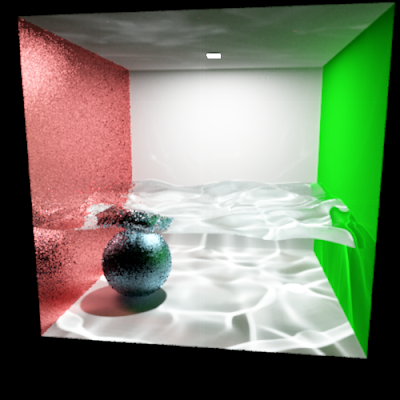

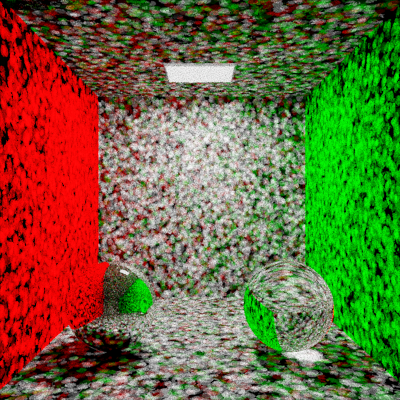

New algorithm:

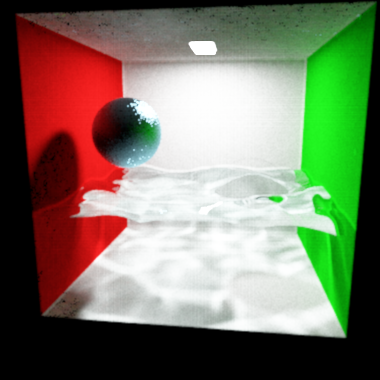

Old algorithm:

Both were rendered in approximately 20 minutes. The glossy reflections on the old algorithm are that much better because they have been oversampled by spawning 8 rays. This has not been done in the newer algorithm yet for simplicity's sake. Note how the diffuse darker areas on the new picture are much cleaner than they are on the old picture.
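For readers unfamiliar with the oversampling mentioned above, here is a minimal sketch of the idea: instead of following one reflected ray, spawn several jittered rays around the mirror direction and average what they return. The names `trace`, `roughness`, `jitter_direction` and `glossy_radiance` are hypothetical and only for illustration, and the uniform jitter is a crude stand-in for a real glossy lobe, not what the renderer actually does.

```python
import math
import random

def normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def jitter_direction(direction, roughness, rng):
    """Perturb a unit direction by a small random offset.

    Assumption: a uniform box offset, chosen for brevity rather than accuracy.
    """
    offset = tuple(rng.uniform(-roughness, roughness) for _ in range(3))
    return normalize(tuple(d + o for d, o in zip(direction, offset)))

def glossy_radiance(trace, origin, mirror_dir, roughness, n_rays=8, rng=None):
    """Average the radiance of n_rays jittered reflection rays.

    `trace(origin, direction)` is a hypothetical callback returning radiance.
    """
    rng = rng or random.Random()
    total = 0.0
    for _ in range(n_rays):
        total += trace(origin, jitter_direction(mirror_dir, roughness, rng))
    return total / n_rays
```

Averaging 8 rays costs roughly 8 times as much per glossy hit, which is consistent with the glossy areas looking cleaner in the old render at the same wall-clock time while the diffuse areas look noisier.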

There is also a boolean object sphere in the water. I wanted to test if my implementation of boolean objects still worked, since they are the main reason the renderer is so slow, and also took me like a month to get working correctly. You can see it better in the old image, where the outline of the sphere is visible as darker than the rest of the white background. It is on the right side.

Next, I plan on adding a sort of importance sampling to the new one (where all its power really lies).

Saturday, October 31, 2009

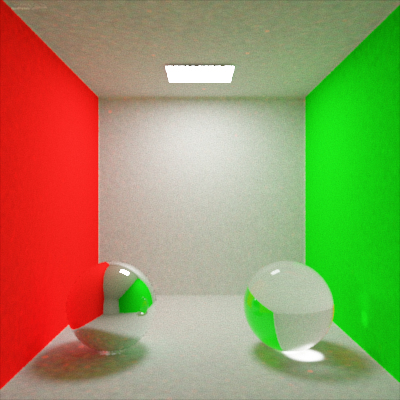

Stochastic Progressive Photon Mapping

It has been implemented. I also added the hashmap, which got me twice as many samples per second. The multithreading is currently broken by a race condition.
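The post doesn't say how the hashmap is laid out, so the following is only a sketch under the assumption that it is a uniform spatial hash: points are bucketed by integer cell coordinates, and a radius query only visits the cells the search sphere overlaps. `HashGrid`, `cell_size`, `insert` and `query` are hypothetical names, and the sketch is single-threaded, so it says nothing about the race condition.

```python
import math
from collections import defaultdict

class HashGrid:
    """Uniform grid stored in a dict keyed by integer cell coordinates."""

    def __init__(self, cell_size):
        self.cell_size = cell_size
        self.cells = defaultdict(list)

    def _cell(self, p):
        # Map a 3D point to the integer coordinates of its cell.
        return tuple(math.floor(c / self.cell_size) for c in p)

    def insert(self, position, item):
        self.cells[self._cell(position)].append((position, item))

    def query(self, p, radius):
        """Return all items stored within `radius` of point `p`."""
        lo = self._cell(tuple(c - radius for c in p))
        hi = self._cell(tuple(c + radius for c in p))
        r2 = radius * radius
        found = []
        for ix in range(lo[0], hi[0] + 1):
            for iy in range(lo[1], hi[1] + 1):
                for iz in range(lo[2], hi[2] + 1):
                    # .get avoids creating empty buckets during lookups.
                    for pos, item in self.cells.get((ix, iy, iz), ()):
                        if sum((a - b) ** 2 for a, b in zip(pos, p)) <= r2:
                            found.append(item)
        return found
```

With a cell size close to the search radius, each query touches only a handful of cells instead of every stored point, which is the kind of change that can plausibly double the sample rate.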

Unfortunately, I can't seem to reproduce the paper's results, where the glossy reflections converge even quicker than the diffuse surfaces. My glossy reflections seem to be converging really slowly (but they are actually converging).

I do have a few new ideas that seem much simpler to implement than my previous Voronoi-cell-based method, and thus fairly publishable. (That method was too complicated for me to bother spending time implementing it instead of studying for school.)

Friday, July 3, 2009

Thursday, June 4, 2009

Progressive Photon Mapping 2.

(After about 15 million samples and 7 hours)

The code for the progressive photon mapping is finally written. For the same number of samples, the algorithm does look better than path tracing, but the sample speed is far worse. While I am not doing it quite the way the paper describes, my method has the same big-O time per sample, if not better. A proof has yet to be done for that. Right off the bat I can think of a couple of optimizations: the first two nodes on the photon path can be ignored and not added to the map, and the direct lighting can be computed explicitly. I say this because this algorithm seems much slower for direct lighting, where graininess and complex lighting are not a problem, and it fails completely for antialiasing, which is only really important under direct lighting.
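As a reference point, here is a sketch of the per-hit-point update as I understand it from the published progressive photon mapping algorithm (not the variant described in this post, which differs from the paper). After each photon pass, a hit point that gathered `m_new` photons shrinks its radius and rescales its accumulated flux so the estimate keeps converging. The function and parameter names are hypothetical, and `alpha` is the user-chosen fraction of new photons to keep, between 0 and 1.

```python
import math

def update_hit_point(radius, n_accum, flux, m_new, flux_new, alpha=0.7):
    """Apply one progressive radius-reduction step to a hit point.

    radius   -- current gather radius
    n_accum  -- accumulated (fractional) photon count so far
    flux     -- accumulated unnormalized flux so far
    m_new    -- photons found inside the radius during this pass
    flux_new -- flux contributed by those photons
    Returns (new_radius, new_count, new_flux).
    """
    if m_new == 0:
        # Nothing landed in the disc this pass, so nothing changes.
        return radius, n_accum, flux
    # Keep only a fraction alpha of the new photons in the running count.
    ratio = (n_accum + alpha * m_new) / (n_accum + m_new)
    new_radius = radius * math.sqrt(ratio)   # disc area shrinks by `ratio`
    new_count = n_accum + alpha * m_new
    new_flux = (flux + flux_new) * ratio     # rescale flux to the smaller disc
    return new_radius, new_count, new_flux
```

Because the radius only ever shrinks, early passes are blurry and later passes sharpen, which matches the progression from a few thousand samples to a million.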

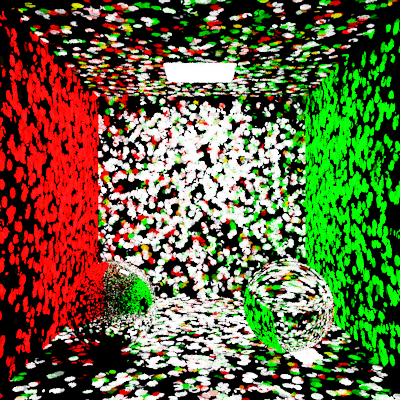

4000 samples:

17000 samples:

1 million samples:

Wednesday, April 1, 2009

Tuesday, March 10, 2009


I multiplied the speed by 10. Now I get 15 million samples within half an hour.
