I also implemented a new algorithm. It doesn't seem to improve the image quality much, but it makes the trade-off between speed and space more controllable, and it saves a lot of space anyway.

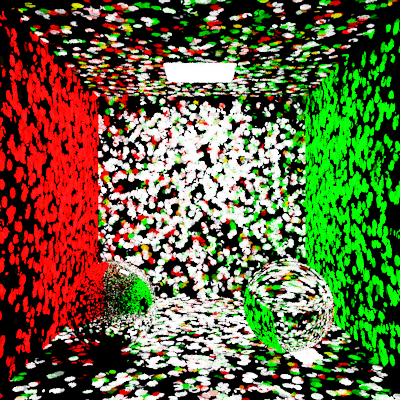

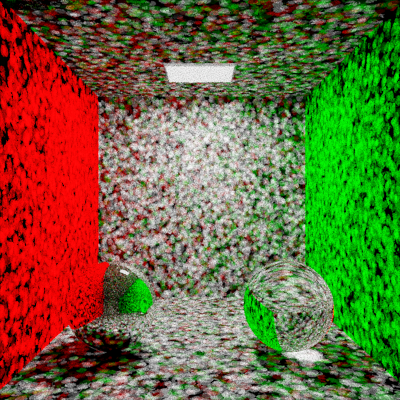

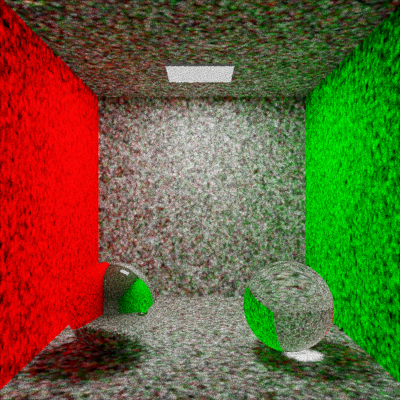

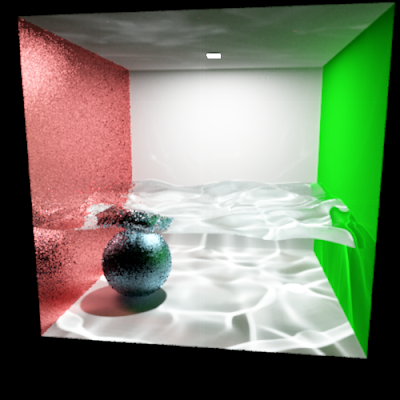

New algorithm:

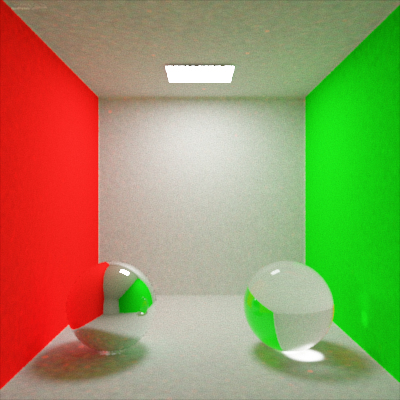

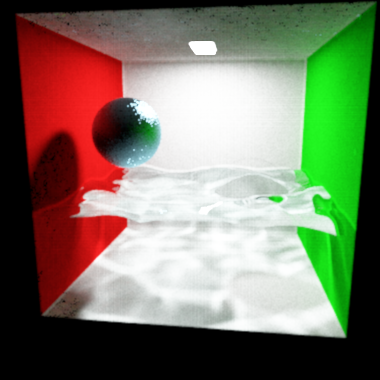

Old algorithm:

Both were rendered in approximately 20 minutes. The glossy reflections look much better with the old algorithm because they were oversampled by spawning 8 rays. The new algorithm doesn't do this yet, for simplicity's sake. Note how the darker diffuse areas in the new picture are much cleaner than in the old one.
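To make the "spawning 8 rays" part concrete, here is a rough sketch of oversampling a glossy reflection. This is not the renderer's actual code: `glossy_radiance`, `jitter`, the `roughness` parameter and the `trace` callback are all names I made up for illustration, and the jitter is a crude box perturbation rather than a proper lobe.

```python
import math
import random

def reflect(d, n):
    """Mirror direction d about unit normal n (both 3-tuples)."""
    k = 2.0 * sum(di * ni for di, ni in zip(d, n))
    return tuple(di - k * ni for di, ni in zip(d, n))

def normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)

def jitter(direction, roughness, rng):
    """Perturb a unit direction by a random offset scaled by roughness.

    Assumption: roughness is small (well below 1). Directions that end up
    below the surface are not rejected in this sketch.
    """
    offset = tuple(rng.uniform(-1.0, 1.0) * roughness for _ in range(3))
    return normalize(tuple(d + o for d, o in zip(direction, offset)))

def glossy_radiance(incoming, normal, roughness, trace, samples=8, rng=None):
    """Average `samples` jittered reflection rays instead of tracing one.

    `trace` is a hypothetical callback: direction -> (r, g, b).
    """
    if rng is None:
        rng = random.Random()
    mirror = normalize(reflect(incoming, normal))
    total = (0.0, 0.0, 0.0)
    for _ in range(samples):
        colour = trace(jitter(mirror, roughness, rng))
        total = tuple(t + c for t, c in zip(total, colour))
    return tuple(t / samples for t in total)
```

Averaging several rays per glossy hit smooths out the reflection at the cost of that many extra traces, which would explain why the old image's reflections look cleaner for the same render time spent elsewhere.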

There is also a boolean object sphere in the water. I wanted to test whether my implementation of boolean objects still worked, since they are the main reason the renderer is so slow, and they also took me about a month to get working correctly. You can see it better in the old image, on the right side, where the outline of the sphere is visible as darker than the rest of the white background.
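For anyone wondering what a boolean object involves, one common way to do it (not necessarily how my renderer does it) is to work with the whole interval along the ray that lies inside each solid, then combine intervals. The sketch below assumes convex solids, so each gives at most one interval; `sphere_interval`, `intersect` and `subtract` are illustrative names only.

```python
import math

def sphere_interval(origin, direction, center, radius):
    """Return (t_enter, t_exit) where the ray is inside the sphere, or None."""
    oc = tuple(o - c for o, c in zip(origin, center))
    a = sum(d * d for d in direction)
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc <= 0.0:
        return None  # ray misses (or only grazes) the sphere
    root = math.sqrt(disc)
    return ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a))

def intersect(a, b):
    """Interval where the ray is inside both solids, or None."""
    if a is None or b is None:
        return None
    lo, hi = max(a[0], b[0]), min(a[1], b[1])
    return (lo, hi) if lo < hi else None

def subtract(a, b):
    """Intervals where the ray is inside a but not inside b (0 to 2 pieces)."""
    if a is None:
        return []
    if b is None:
        return [a]
    pieces = []
    if b[0] > a[0]:
        pieces.append((a[0], min(a[1], b[0])))
    if b[1] < a[1]:
        pieces.append((max(a[0], b[1]), a[1]))
    return pieces

# Usage: a radius-2 sphere with a radius-1 sphere carved out of its middle.
outer = sphere_interval((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), (0.0, 0.0, 0.0), 2.0)
inner = sphere_interval((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), (0.0, 0.0, 0.0), 1.0)
shell = subtract(outer, inner)
```

The extra cost comes from needing both the entry and the exit point on every operand for every ray, instead of stopping at the nearest hit.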

Next, I plan on adding a sort of importance sampling to the new algorithm, which is where all its power really lies.

I multiplied the speed by 10. Now I get 15 million samples within half an hour.
